Dear FLUKA experts,

Question: Is there a way to rerun a simulation such that the output is identical (e.g. by forcing the random seed to a preset value)?

More info: I am investigating how different settings along the beamline influence the radiation downstream, while keeping the upstream settings identical. To remove statistical fluctuations as a source of differences, I would like to run every simulation with the same initial seed, so that the radiation upstream of my changes is identical between runs and the results differ only where I change the settings. That would isolate the consequences of the changes I make.

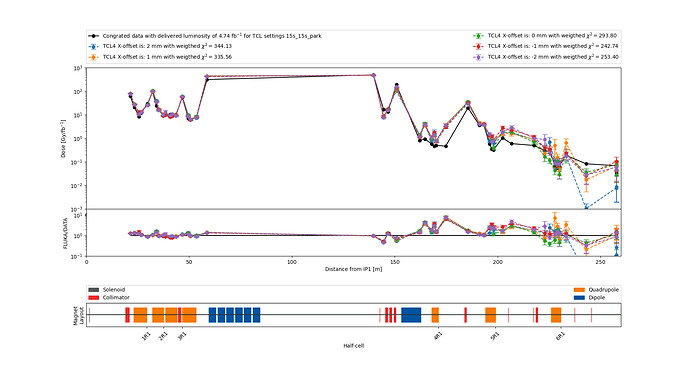

For example, look at the graph above, where I ran the simulation with 5 different values of a single setting at ~150 m. I would have expected (wished for) identical results before 150 m (and I truly mean identical, not just in agreement with each other) and changes only afterwards.

Each of these 5 simulations was run on a cluster as 50 jobs, where the seed was set from N = 1 to 50 using the card

RANDOMIZ 1.0 N.0

with 20 cycles and 100 primaries each.
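
To make the seed assignment described above concrete, here is a minimal Python sketch of how the 50 per-job cards could be generated. It is only an illustration: the helper name `randomiz_card` is hypothetical, and the sketch assumes FLUKA's fixed-format layout (keyword in columns 1-10, followed by 10-character numeric fields), with the first value set to 1.0 as in the card above and the second value holding the seed N.

```python
def randomiz_card(seed: int, unit: float = 1.0) -> str:
    """Build one RANDOMIZ card (hypothetical helper).

    Assumes fixed-format input: keyword left-aligned in columns 1-10,
    then right-aligned 10-character fields for the two values.
    """
    return f"{'RANDOMIZ':<10}{unit:>10.1f}{float(seed):>10.1f}"


# One card per cluster job, seeds N = 1 .. 50.
# Reusing the same set of cards for every setting keeps the
# random-number sequence of job N the same across all settings.
cards = {n: randomiz_card(n) for n in range(1, 51)}

for n in (1, 50):
    print(cards[n])
```

Each card would then replace the RANDOMIZ line of the input file for job N, so that job N of every setting starts from the same seed.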

Is such a procedure possible, and are there arguments against it?

Thank you so much for your time!

Best,

Daniel