Dear colleagues,

In my simulation I have an inhomogeneous hadronic calorimeter made of several layers, each divided into a scintillator part and an absorber part. I modeled the first layer in detail and then used the LATTICE feature to replicate it.

After about 10k primaries, the simulation crashed with the following error:

```
Geofar: Particle in region 9 (cell # 20)
in position -2.706968472E+01 2.306588177E+01 4.151000208E+02
is now causing trouble, requesting a step of 4.821616320E+00 cm
to direction 3.129836027E-01 9.497585294E-01 -1.808674501E-05
end position -2.556059787E+01 2.764525300E+01 4.150999336E+02
R2: 3.556406519E+01 R3: 4.166207268E+02 cm error count: 0
X*U (2D): 1.343465050E+01 X*U (3D): 1.342714269E+01 cm
X*UOLD(2D): 1.736273063E+01 X*UOLD(3D): -2.990530501E+00 cm
Kloop: 848497963, Irsave: 9, Irsav2: 12, error code: -33 Nfrom: 5000
old direction 1.935300256E-01 9.798683452E-01 -4.903218527E-02, lagain, lstnew, lsense, lsnsct F F F F
Particle index 7 total energy 1.440058354E-03 GeV Nsurf 0
Try again to establish the current region moving the particle of a 1.218815943E-08 long step
We succeeded in saving the particle: current region is n. 9 (cell # 20)
Geofar: Particle in region 9 (cell # 20)
...
...
Abort called from FLKAG1 reason TOO MANY ERRORS IN GEOMETRY Run stopped!
STOP TOO MANY ERRORS IN GEOMETRY
```

The point (-2.706968472E+01, 2.306588177E+01, 4.151000208E+02) where the error is reported lies exactly at the interface between two lattice regions.

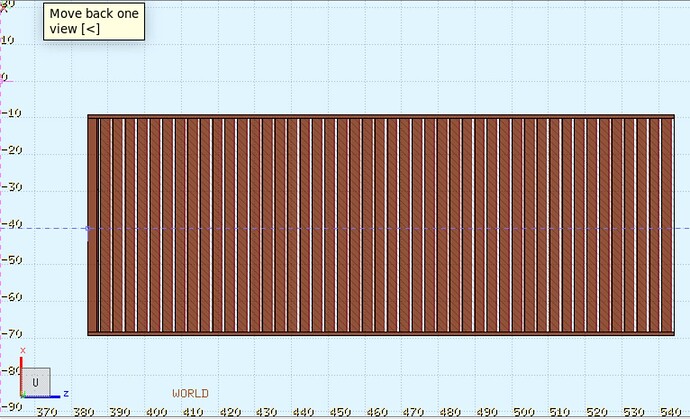

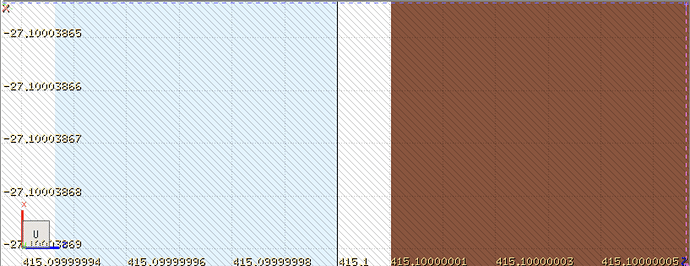
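To double-check my reading of the log, I reproduced the numbers printed by Geofar with a short Python script. It is only a sketch under my own assumptions: I take R2 to be the transverse radius, R3 the distance from the origin, and X*U the dot product of position and direction (the computed values agree with the logged ones, which supports that reading), and I assume the lattice interface is the plane z = 415.1 cm. With those assumptions, the requested step crosses that plane about 1.15 cm after the starting point, at a grazing angle of roughly 1E-3 degrees.

```python
import math

# Numbers copied from the Geofar message above (lengths in cm)
pos = (-2.706968472e+01, 2.306588177e+01, 4.151000208e+02)
direction = (3.129836027e-01, 9.497585294e-01, -1.808674501e-05)
old_direction = (1.935300256e-01, 9.798683452e-01, -4.903218527e-02)
step = 4.821616320e+00
logged_end = (-2.556059787e+01, 2.764525300e+01, 4.150999336e+02)
z_interface = 415.1  # assumption: z of the lattice interface seen in flair


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def end_point(p, u, s):
    """End of a straight step of length s from p along the unit vector u."""
    return tuple(pi + s * ui for pi, ui in zip(p, u))


def plane_crossing(p, u, z_plane):
    """Path length at which the line p + t*u reaches z = z_plane (None if parallel)."""
    if u[2] == 0.0:
        return None
    return (z_plane - p[2]) / u[2]


end = end_point(pos, direction, step)
r2 = math.hypot(pos[0], pos[1])          # assumed meaning of "R2"
r3 = math.sqrt(dot(pos, pos))            # assumed meaning of "R3"
xu_2d = dot(pos[:2], direction[:2])      # assumed meaning of "X*U (2D)"
xu_3d = dot(pos, direction)              # assumed meaning of "X*U (3D)"
xuold_2d = dot(pos[:2], old_direction[:2])
xuold_3d = dot(pos, old_direction)
t_cross = plane_crossing(pos, direction, z_interface)
grazing_deg = math.degrees(math.asin(abs(direction[2])))

print("computed end :", end)
print("logged end   :", logged_end)
print(f"R2 = {r2:.8f}  R3 = {r3:.7f}")
print(f"X*U    2D/3D = {xu_2d:.8f} / {xu_3d:.8f}")
print(f"X*UOLD 2D/3D = {xuold_2d:.8f} / {xuold_3d:.9f}")
print(f"step crosses z = {z_interface} after {t_cross:.4f} cm (of {step:.4f} cm)")
print(f"angle between direction and that plane: {grazing_deg:.2e} deg")
```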

I used FLAIR to check the geometry for errors and, after zooming in (a lot!) on that region, I saw something strange, shown below.

Zoom:

As you can see, there is a white region between Z = 415.1 cm and Z = 415.1 + 1E-8 cm that I cannot explain.

Here is the input file I am currently using:

na64_2022B_h_50GeV.inp (324.0 KB)

I am currently using FLUKA 4.3.3. I will update to the latest FLUKA version soon, but from the changelog it does not look like this issue is addressed by a newer release (maybe I am wrong?).

Thanks,

Best,

Andrea